Authors: Asma Zgolli and Mouna Labiadh

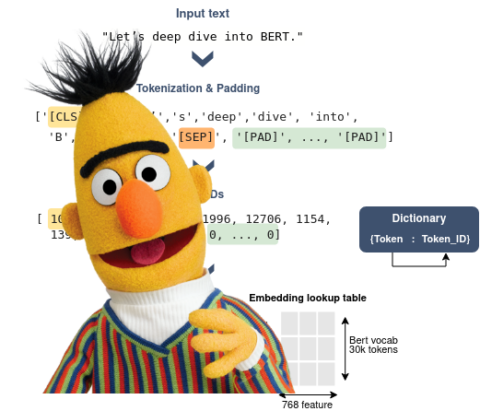

Exploring BERT: Feature extraction & Fine-tuning

Natural language processing (NLP) is a set of techniques for interpreting and analyzing human language. Used as a component in larger pipelines, it lets us solve predictive analytics tasks and extract valuable insights from unstructured text data.

The introduction of transformers was a major breakthrough in NLP: it paved the way for large language models (LLMs) such as BERT, BART, and GPT, and for generative AI research.

In this article, we walk through key NLP concepts. In the first section, we summarize the transformer architecture and highlight its core concepts, such as the attention mechanism. In the second section, we focus on BERT, one of the most popular transformer-based LLMs, and present examples of how it is used in data science applications.